In mid-May 2023, a warning popped up in the Microsoft Message Center regarding "Non-Compliant Power Automate Flows". The message disappeared soon after, however, so the majority of Dataverse and Dynamics 365 admins probably missed it.

It did raise some concerns in the broader Microsoft community (see "Will Power Automate enforcement licensing kill your flows?" and "Upcoming licensing enforcement in Power Automate explained").

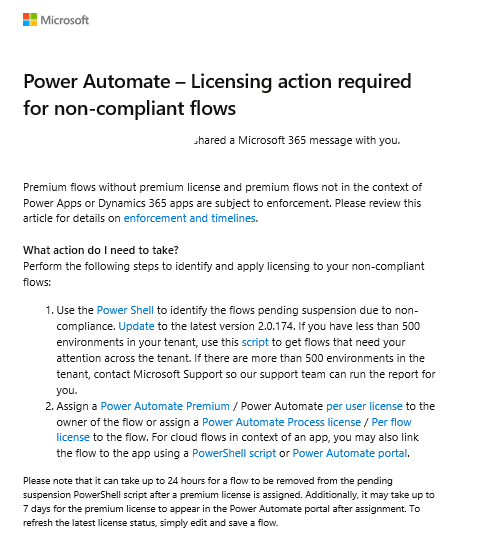

To be honest, I did not pay a lot of attention, since the message apparently vanished into thin air and Microsoft support, when I consulted them, said it had been sent prematurely. But then, at the end of October, another warning popped up.

So it seems that Microsoft is finally cracking down on Power Automate flows that use premium connectors without being associated with a properly licensed user, and on flows that are not directly linked to a Power App. If you have built your own model-driven app on top of Dynamics 365 Customer Engagement (CE) and it uses Power Automate flows, you will need to associate those flows with your new app.

There is a PowerShell script to identify the flows which are at risk of being turned off across your tenant. See "I have many environments - how can I get the flows that need my attention across tenants" in the Power Automate Licensing FAQ; the script uses the Get-AdminFlowAtRiskOfSuspension cmdlet.

The Get-AdminFlowAtRiskOfSuspension cmdlet is part of a separate PowerShell module, which you can install using Install-Module -Name Microsoft.PowerApps.Administration.PowerShell. It will run a scan of your environments and outline the flows that are at risk of being turned off.
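As a rough illustration, the scan could look something like the sketch below. It is modelled loosely on the approach described in the Licensing FAQ, not copied from it. It assumes you sign in with an account that has Power Platform admin rights. The output file name flows-at-risk.csv is just a placeholder, and the columns in the export depend on what the cmdlet returns in your tenant.

```powershell
# Minimal sketch, not the official script: assumes Power Platform admin rights.
# One-time install of the admin module for the current user.
Install-Module -Name Microsoft.PowerApps.Administration.PowerShell -Scope CurrentUser

# Interactive sign-in to the Power Platform admin endpoints.
Add-PowerAppsAccount

# Loop over every environment in the tenant and collect the flagged flows.
$atRiskFlows = Get-AdminPowerAppEnvironment | ForEach-Object {
    Write-Host "Scanning environment $($_.DisplayName)..."
    Get-AdminFlowAtRiskOfSuspension -EnvironmentName $_.EnvironmentName
}

if ($atRiskFlows) {
    # "flows-at-risk.csv" is an arbitrary (hypothetical) output file name.
    $atRiskFlows | Export-Csv -Path "flows-at-risk.csv" -NoTypeInformation
    Write-Host "Found $(@($atRiskFlows).Count) flow(s) at risk of suspension."
} else {
    Write-Host "No flows at risk of suspension were reported."
}
```

Exporting to CSV makes it easy to hand the list to the flow owners or to sort it by environment in Excel before deciding which flows to associate with an app.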

Check out "Associate flows with apps - Power Automate | Microsoft Learn" to see how to link a flow to an app (the screenshot below shows where to do this on the Power Automate flow detail screen). If you make this change on a flow that is part of a solution, the associations become part of the solution file and can be transported across environments.

Related articles/blog posts: